In today's fast-paced digital landscape, speed is king. Whether you're browsing a website, streaming content, or using a mobile app, waiting even a few extra seconds can feel like an eternity.

Imagine a world where your favorite websites and applications loaded in the blink of an eye, where videos streamed without a stutter, and where online shopping carts never lost their contents. This is the promise of caching.

This blog post will help you understand how caching works.

We'll delve into what caching is and why it's crucial to set up this mechanism in your application.

Whether you're a tech enthusiast, a business owner, or simply someone who loves a smooth online experience, this exploration of caching's inner workings will leave you with a newfound appreciation for the magic that happens behind the scenes every time you click, tap, or swipe.

So, let's dive into it!

What is caching?

Caching is a mechanism aimed at enhancing the performance of any kind of application. It can also be viewed as the process of storing data in a cache and accessing it from there.

Dealing with caching is complex, but understanding this concept is absolutely necessary for every developer.

Accessing large amounts of data from persistent storage takes a considerable amount of time. As a result, data that has been retrieved or processed once should be stored in faster memory so that it can be reused.

This is where a cache comes into play. So, what is a cache?

A cache is a software or hardware component aimed at storing data so that future requests for the same data can be served faster. It can also be viewed as a high-speed data storage layer whose primary purpose is to reduce the need to access slower data storage layers.

To be cost-effective and efficient, caches are usually implemented using fast-access hardware such as RAM (Random Access Memory).

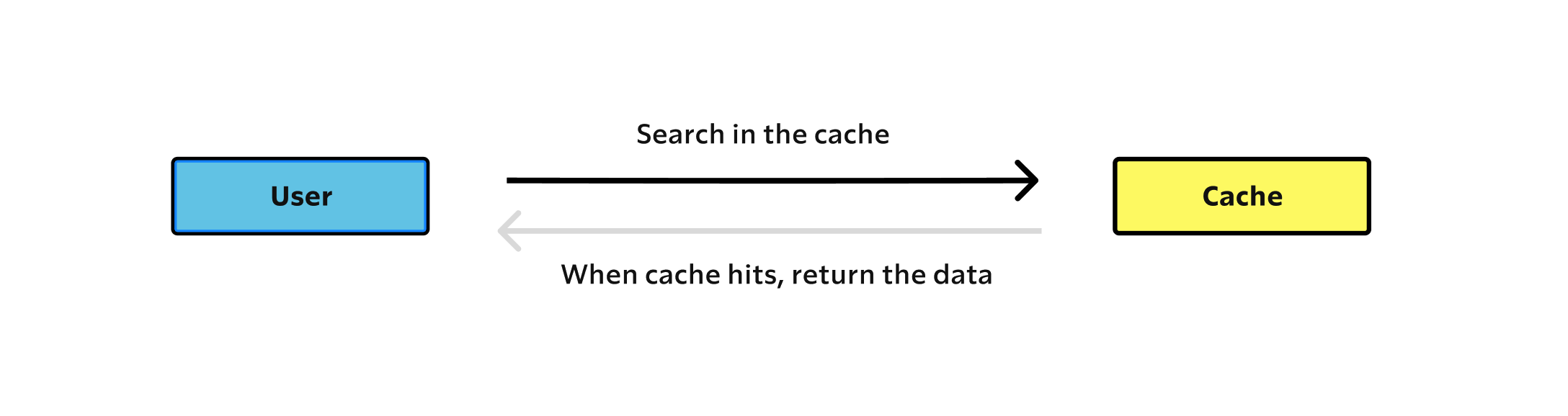

When the requested data is found in the cache, a cache hit occurs and the data is returned quickly.

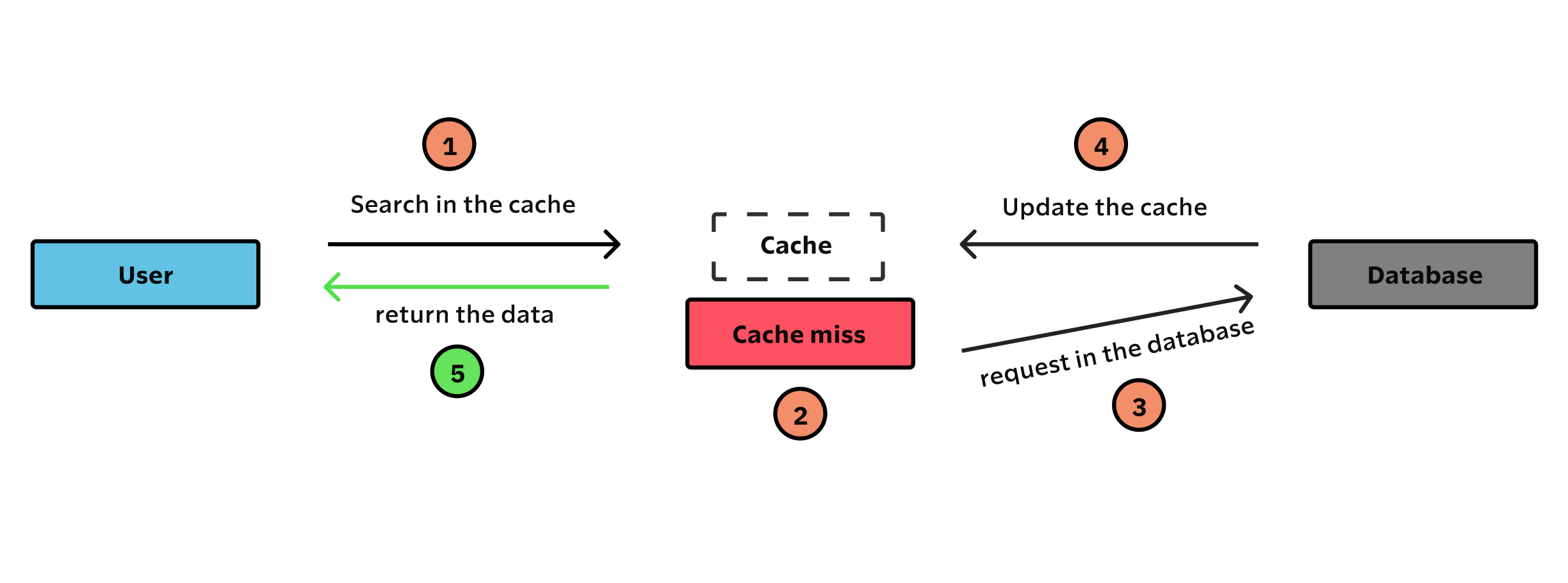

On the other hand, when the requested data is not found in the cache, a cache miss occurs.

A cache miss adds latency, since the requested data must be retrieved from the next cache level or from main memory.
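To make hits and misses concrete, here is a minimal sketch using Python's built-in `functools.lru_cache`, which keeps function results in memory and reports hit and miss counts through `cache_info()`. The `slow_square` function and its `time.sleep` delay are hypothetical stand-ins for a slow data source.

```python
import time
from functools import lru_cache


@lru_cache(maxsize=128)
def slow_square(n: int) -> int:
    """Hypothetical slow lookup: the sleep simulates reading from slow storage."""
    time.sleep(0.1)
    return n * n


slow_square(4)  # first call with 4: cache miss, the function body runs
slow_square(4)  # same argument again: cache hit, the stored result is returned
slow_square(5)  # new argument: another cache miss

info = slow_square.cache_info()
print(f"hits={info.hits} misses={info.misses}")  # hits=1 misses=2
```

Only the first call for each argument pays the simulated delay; repeated calls are served straight from memory.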

There are many types of caching.

Types of caching

The most commonly used ones are:

- In-memory Caching

- Database Caching

- Web Caching

- CDN Caching

In-Memory Caching

In this type of caching, data is stored directly in RAM. Memcached and Redis are examples of in-memory caching systems. The most common implementation of this type of caching is based on key-value databases.

These can be seen as sets of key-value pairs: the key is a unique identifier, while the value is the cached data.
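The key-value model can be sketched in a few lines. This is an in-process illustration backed by a plain Python dict, not the Memcached or Redis client API (those systems offer similar get/set operations, but over the network). The `KeyValueCache` class and the `user:42` key format are hypothetical.

```python
from typing import Any, Dict, Optional


class KeyValueCache:
    """Minimal in-memory key-value cache backed by a dict (illustrative only)."""

    def __init__(self) -> None:
        self._store: Dict[str, Any] = {}

    def set(self, key: str, value: Any) -> None:
        # The key is a unique identifier; the value is the cached data.
        self._store[key] = value

    def get(self, key: str) -> Optional[Any]:
        # Returns None on a miss so the caller can fall back to the slower source.
        return self._store.get(key)

    def delete(self, key: str) -> None:
        self._store.pop(key, None)


cache = KeyValueCache()
cache.set("user:42", {"name": "Ada"})
print(cache.get("user:42"))  # {'name': 'Ada'}
print(cache.get("user:43"))  # None (cache miss)
```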

Database Caching

Most databases come with some level of caching. By caching the results of recently executed queries, the database can return previously cached data immediately.

This way, for as long as the cached data remains valid, the database can avoid re-executing the same queries. Hibernate's first-level cache is an example of database caching.
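Database engines and ORMs implement this internally, so the sketch below is only a simplified, application-side illustration of query-result caching with a validity period; it is not how Hibernate's first-level cache works. It uses Python's built-in `sqlite3` module, and `QueryCache`, `ttl_seconds`, and the `products` table are hypothetical. The clock is injectable so expiry can be tested without waiting.

```python
import sqlite3
import time
from typing import Any, Callable, Dict, List, Tuple


class QueryCache:
    """Caches query results for ttl_seconds, keyed by SQL text and parameters."""

    def __init__(self, conn: sqlite3.Connection, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.conn = conn
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self.executed = 0  # how many queries actually reached the database
        self._results: Dict[Tuple[str, Tuple[Any, ...]], Tuple[float, List[tuple]]] = {}

    def query(self, sql: str, params: Tuple[Any, ...] = ()) -> List[tuple]:
        key = (sql, params)
        entry = self._results.get(key)
        now = self.clock()
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1]  # still valid: serve the cached rows
        rows = self.conn.execute(sql, params).fetchall()  # expired or missing: run it
        self.executed += 1
        self._results[key] = (now, rows)
        return rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO products (name) VALUES ('keyboard'), ('mouse')")

qc = QueryCache(conn, ttl_seconds=30)
qc.query("SELECT name FROM products ORDER BY id")
qc.query("SELECT name FROM products ORDER BY id")
print(qc.executed)  # 1: the second call was served from the cache
```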

Web Caching

Web caching is a mechanism that stores web content for reuse. It can be divided into two subcategories: Web Server Caching and Web Client Caching.

The mechanism is pretty simple in both cases: a page is cached the first time a user visits it, and when the same page is requested again, the cache serves a copy of it.
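Both sides can be illustrated with one small, framework-agnostic sketch. On the server side, a page is rendered once and the stored copy is served afterwards; on the client side, the standard HTTP `Cache-Control: max-age` header tells the browser it may reuse the response for that many seconds. `PageCache`, `render_page`, and `handle` are hypothetical names, and for simplicity the server-side copies here never expire.

```python
from typing import Callable, Dict, Tuple

Response = Tuple[int, Dict[str, str], str]


class PageCache:
    """Server-side page cache: renders a page once, then serves the stored copy."""

    def __init__(self, render: Callable[[str], str], max_age: int = 60) -> None:
        self.render = render
        self.max_age = max_age
        self.renders = 0
        self._pages: Dict[str, str] = {}

    def handle(self, path: str) -> Response:
        if path not in self._pages:
            self._pages[path] = self.render(path)  # first visit: cache the rendered page
            self.renders += 1
        # Cache-Control lets the browser (client-side cache) reuse the page for max_age seconds.
        headers = {"Content-Type": "text/html", "Cache-Control": f"max-age={self.max_age}"}
        return 200, headers, self._pages[path]


def render_page(path: str) -> str:
    # Hypothetical renderer standing in for templates and database calls.
    return f"<h1>Page {path}</h1>"


server = PageCache(render_page)
server.handle("/about")
status, headers, body = server.handle("/about")
print(server.renders, headers["Cache-Control"])  # 1 max-age=60
```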

CDN Caching

CDN stands for Content Delivery Network, a component used in modern web applications. It improves content delivery by replicating commonly requested files such as web pages, images, stylesheets, scripts, videos, etc. across a distributed set of caching servers.

It can also be seen as a system of gateways between the user and the origin server that store copies of the origin's resources.
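The sketch below models that idea with plain Python objects: each regional edge server keeps its own copies and only contacts the origin on a miss. `Origin`, `EdgeServer`, `serve`, and the region names are hypothetical; real CDNs route users to an edge using techniques such as DNS or anycast, which this toy lookup does not attempt to reproduce.

```python
from typing import Dict


class Origin:
    """The origin server that owns the real resources."""

    def __init__(self, files: Dict[str, bytes]) -> None:
        self.files = files
        self.requests = 0  # how often edges had to come back to the origin

    def fetch(self, path: str) -> bytes:
        self.requests += 1
        return self.files[path]


class EdgeServer:
    """A caching server in one region that keeps copies of origin files."""

    def __init__(self, origin: Origin) -> None:
        self.origin = origin
        self._copies: Dict[str, bytes] = {}

    def get(self, path: str) -> bytes:
        if path not in self._copies:
            self._copies[path] = self.origin.fetch(path)  # miss: pull from origin once
        return self._copies[path]


origin = Origin({"/logo.png": b"fake-image-bytes", "/app.js": b"console.log('hi')"})
edges = {"eu": EdgeServer(origin), "us": EdgeServer(origin)}


def serve(region: str, path: str) -> bytes:
    # Hypothetical routing: pick the edge by region name.
    return edges[region].get(path)


serve("eu", "/logo.png")  # EU edge miss: request goes to the origin
serve("eu", "/logo.png")  # EU edge hit
serve("us", "/logo.png")  # US edge miss: request goes to the origin
print(origin.requests)    # 2
```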

What are the benefits of using caching?

In a real-world scenario, neither users nor developers want applications to take a long time to process requests. As developers, we would be proud to deploy the best-performing version of our applications.

As end users, we are willing to wait only a few seconds, and sometimes only milliseconds. In both cases, no one loves wasting their time looking at loading messages.

So, the number one benefit of using this mechanism is speed.

Challenges faced when using caching

Caching is a complex practice, and using it comes with inevitable drawbacks.

Let's look at the most troublesome ones.

Coherence Problem: Whenever data is cached, a copy is created, so there are now two copies of the same data.

This means that they can diverge over time. Identifying the best cache update or invalidation mechanism is one of the most difficult challenges related to caching, and perhaps one of the hardest challenges in computer science.
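One common (but by no means universal) way to limit the coherence problem is to invalidate the cached copy whenever the underlying data is written, a pattern often described as cache-aside with invalidation. The sketch below uses plain dicts; `Database`, `CachedRepository`, and the `price:1` key are hypothetical.

```python
from typing import Dict, Optional


class Database:
    """Stand-in for the slow, authoritative data store."""

    def __init__(self) -> None:
        self.rows: Dict[str, str] = {}


class CachedRepository:
    """Cache-aside reads with invalidate-on-write to keep the two copies coherent."""

    def __init__(self, db: Database) -> None:
        self.db = db
        self.cache: Dict[str, str] = {}

    def read(self, key: str) -> Optional[str]:
        if key in self.cache:
            return self.cache[key]
        value = self.db.rows.get(key)
        if value is not None:
            self.cache[key] = value  # a second copy of the data now exists
        return value

    def write(self, key: str, value: str) -> None:
        self.db.rows[key] = value
        # Without this line, read() would keep returning the old cached copy.
        self.cache.pop(key, None)


db = Database()
repo = CachedRepository(db)
repo.write("price:1", "10.00")
print(repo.read("price:1"))  # 10.00 (miss, now cached)
repo.write("price:1", "12.50")
print(repo.read("price:1"))  # 12.50, not the stale 10.00
```

This only works if every write goes through `write`; a process that updates the database directly leaves a stale copy behind, which is part of why invalidation is so hard in practice.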

Choosing Which Data to Cache: Virtually any kind of data can be cached, so deciding what should reside in our cache and what to exclude is open to endless possibilities.

This can make it a very complex decision. When tackling this problem, there are several aspects to consider; here are the three most common ones:

- If we expect data to change often, we should not cache it for too long. Otherwise, we may serve users inaccurate data. On the other hand, this also depends on how long we can tolerate stale data.

- Our cache should always be ready to store frequently requested data that takes a long time to generate or retrieve. Identifying this data is not an easy task, and you risk filling your cache with useless data.

- By caching large data, you may fill your cache very rapidly or, worse, use up all available memory. If your RAM is shared between your application and your caching system, this can easily become a problem, which is why you should limit the amount of RAM reserved for caching.
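The first and third points above are often handled together with a time-to-live (TTL) per entry and a cap on cache size. The sketch below is a minimal least-recently-used (LRU) cache built on `collections.OrderedDict`; `BoundedTTLCache`, `max_entries`, and `ttl_seconds` are hypothetical names. It limits the number of entries rather than bytes, whereas production caches usually limit memory directly (Redis, for example, has a `maxmemory` setting).

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable, Optional


class BoundedTTLCache:
    """LRU cache with a maximum number of entries and a per-entry time-to-live."""

    def __init__(self, max_entries: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_entries = max_entries
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        # key -> (stored_at, value); iteration order tracks recency, oldest first
        self._data: OrderedDict = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at >= self.ttl_seconds:
            del self._data[key]  # expired: treat as a miss rather than serve stale data
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def set(self, key: Hashable, value: Any) -> None:
        self._data[key] = (self.clock(), value)
        self._data.move_to_end(key)
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry


now = [0.0]
cache = BoundedTTLCache(max_entries=2, ttl_seconds=60, clock=lambda: now[0])
cache.set("a", 1)
cache.set("b", 2)
cache.get("a")         # touching "a" makes "b" the least recently used
cache.set("c", 3)      # over the limit: "b" is evicted
print(cache.get("b"))  # None
now[0] = 61.0
print(cache.get("a"))  # None: expired after 60 seconds
```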

Dealing with Cache Misses: Cache misses represent the time-based cost of having a cache. In fact, cache misses introduce latencies that would not have been incurred in a system without caching.

So, to benefit from the speed boost a cache provides, cache misses must be kept relatively low, in particular compared to cache hits. Achieving this is not easy, and if it isn't achieved, our caching system can turn into nothing more than overhead.
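A back-of-the-envelope model makes this trade-off explicit. Assuming every request first checks the cache (cost `t_cache`) and misses additionally go to the backend (cost `t_backend`), the average cost per request is `t_cache + (1 - h) * t_backend` for hit ratio `h`, and the cache only pays off when `h > t_cache / t_backend`. The model ignores costs such as populating or evicting entries, and the numbers below are illustrative, not measurements.

```python
def expected_latency(hit_ratio: float, t_cache: float, t_backend: float) -> float:
    """Average time per request when every request checks the cache first.

    Hits cost t_cache; misses cost t_cache (the failed lookup) plus t_backend.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return t_cache + (1.0 - hit_ratio) * t_backend


def break_even_hit_ratio(t_cache: float, t_backend: float) -> float:
    """Hit ratio above which the cache beats going straight to the backend."""
    # Cache wins when t_cache + (1 - h) * t_backend < t_backend, i.e. h > t_cache / t_backend.
    return t_cache / t_backend


# Illustrative numbers only: 1 ms cache lookup, 20 ms backend query.
print(f"{expected_latency(0.9, 1.0, 20.0):.1f} ms")   # 3.0 ms instead of 20 ms
print(f"{expected_latency(0.02, 1.0, 20.0):.1f} ms")  # 20.6 ms: worse than no cache
print(f"{break_even_hit_ratio(1.0, 20.0):.2f}")       # 0.05
```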

Wrapping up

In conclusion, caching is an essential concept that every developer should understand to improve their application's performance.

By implementing caching, developers can improve their application's speed, but they must also deal with challenges such as the coherence problem, choosing which data to cache, and cache misses.
